Telemetric Robot Hand

Building a system from the ground up

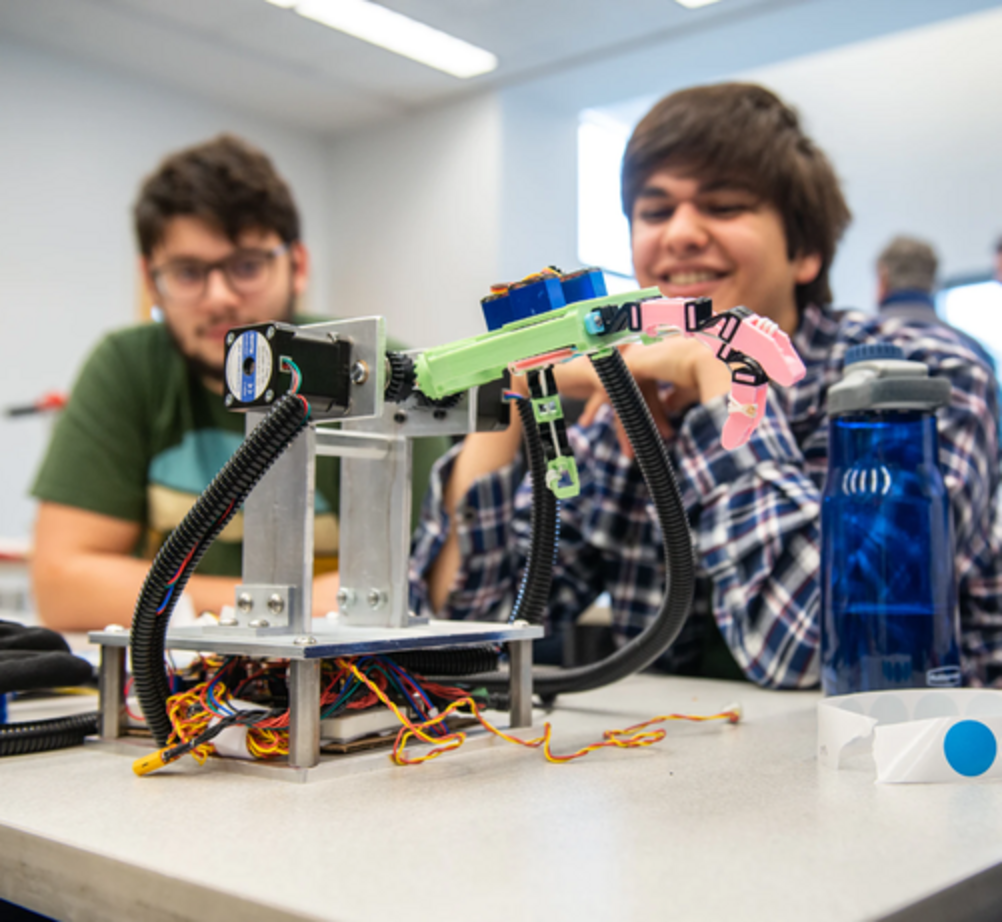

Final prototype during a demo. Left: Jamie Santiago | Right: Everardo Gonzalez (me)

### About

**Collaborators:** Jasmine Kamdar, Jamie Santiago, Mason Grabowski, Mads Young

**Timeline:** 7-week final project for a Principles of Engineering course

I worked on this project as an open-ended final project for a Principles of Engineering course that focused on collaboration, iterative design, and systems integration. My team and I decided to design and build a compliant, telerobotic hand that could mimic the movement of a person's hand in real time. We followed a scrum framework prescribed by our professors, delivering an improved version of our robot hand at the end of every two-week sprint. Over the course of the seven weeks, we iteratively improved the hand until we landed on our final product: a wearable sensing glove that tracks a person's hand, and a wrist with compliant fingers that bend along with the person's fingers.

…prototype a Virtual Reality (VR) based control room for underwater Remotely Operated Vehicles (ROVs) as a potential upgrade to conventional ROV control rooms, with funding from Dassault Systèmes. I worked on this project as a senior at Olin College with four other senior students and a faculty advisor. We also collaborated directly with a team of pilots, scientists, and engineers at the Monterey Bay Aquarium Research Institute (MBARI) to better understand the needs of our prototype's end users. We tested our software with MBARI's MiniROV in their one-million-liter saltwater test tank facility to create a final prototype capable of displaying real-time stereo-camera footage and telemetry data.
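The prototype's stereo footage came from fisheye cameras, and dewarping and reprojecting that footage is one of the computer-vision steps this project relied on. The sketch below assumes an ideal equidistant fisheye model (r = f·θ) rather than MBARI's actual calibrated lens model, and the function names and parameters are hypothetical. It shows how a pixel in a flat, pinhole-style output view maps back to a source pixel in the fisheye image:

```python
import math


def rectilinear_to_fisheye(u, v, f_out, c_out, f_fish, c_fish):
    """Map an output (pinhole-style) pixel (u, v) to its source pixel in an
    equidistant fisheye image, where image radius r = f_fish * theta.

    Hypothetical helper: c_out and c_fish are (cx, cy) principal points,
    f_out and f_fish are focal lengths in pixels.
    """
    # Back-project the output pixel to a ray (x, y, 1) in camera space.
    x = (u - c_out[0]) / f_out
    y = (v - c_out[1]) / f_out
    # Angle of the ray from the optical axis, and its azimuth around the axis.
    theta = math.atan(math.hypot(x, y))
    phi = math.atan2(y, x)
    # Equidistant model: fisheye radius grows linearly with theta.
    r = f_fish * theta
    return (c_fish[0] + r * math.cos(phi), c_fish[1] + r * math.sin(phi))


def build_remap(width, height, f_out, f_fish, c_fish):
    """Build per-pixel lookup tables (map_x, map_y) for a width x height
    output view centered on the fisheye camera's optical axis."""
    c_out = ((width - 1) / 2, (height - 1) / 2)
    map_x = [[0.0] * width for _ in range(height)]
    map_y = [[0.0] * width for _ in range(height)]
    for v in range(height):
        for u in range(width):
            map_x[v][u], map_y[v][u] = rectilinear_to_fisheye(
                u, v, f_out, c_out, f_fish, c_fish
            )
    return map_x, map_y
```

The center of the output view always lands on the fisheye principal point, and pixels farther out sample progressively larger angles from the axis. Lookup tables like these are the kind of input OpenCV's `cv2.remap` consumes (after conversion to `float32` arrays); a real pipeline would use the camera's calibrated intrinsics and distortion coefficients instead of the ideal model assumed here.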
This project has been included in two academic papers: **"A Virtual Reality Video System for Deep Ocean Remotely Operated Vehicles"** in IEEE OCEANS 2021, and **"Catching Jellies in Immersive Virtual Reality: A Comparative Teleoperation Study of ROVs in Underwater Capture Tasks"** in VRST 2021. The first paper details the integration of the VR control room with MBARI's custom 4K fisheye stereo camera, designed specifically for ROV operation at extreme depths. The second presents the results of a user study with ROV pilots that compared the effectiveness of the VR control room to a conventional control room. When pilots used the VR control room instead of the conventional one, they cut their task completion time in *half*, suggesting this technology is promising for the future of ROV piloting.

### My Contributions

My technical contributions included writing software for different telemetry data overlays, investigating methods for feeding stereo camera footage into the VR control room, and double-checking our computer vision algorithms for dewarping and reprojecting fisheye camera footage. Other contributions included interviewing MBARI stakeholders, writing and organizing technical docs for a clean hand-off of our software to MBARI, and contributing to external-facing documentation.

### Further Reading

Overview Poster